Mar 20 2020

Published by

NYU Shanghai

According to the World Health Organization, at least 2.2 billion people around the world are impacted by vision impairment or blindness caused by eye diseases, such as glaucoma, cataract, and age-related macular degeneration. Visual-field maps (VFMs) that assess a patient’s central and peripheral vision are used routinely by clinicians to diagnose and manage these eye diseases.

Current technologies for VFM assessments, however, are often inaccurate and imprecise, impeding the diagnosis and treatment of eye diseases. Lu Zhong-Lin, NYU Shanghai’s Chief Scientist and Associate Provost for Sciences, and his collaborators, Xu Pengjing and Yu Deyue from The Ohio State University and Luis Andres Lesmes from Adaptive Sensory Technology, Inc., have developed a new method, called qVFM, that will enable clinicians to assess VFMs more accurately and precisely while remaining highly efficient. The study was published in December 2019 in the Journal of Vision, one of the leading journals in vision science.

Currently, the gold standard for VFM assessment in clinical ophthalmic diagnostics is standard automated perimetry (SAP), which measures light sensitivity across different retinal locations to produce a light-sensitivity VFM. However, the technology’s precision is far from ideal. For example, in one Ocular Hypertension Treatment Study, 85.9% of the abnormal perimetry results were not verified in a repeated test.

Standard Automated Perimetry (SAP)

“We can think of each VFM instrument as a ruler,” Lu said. “SAP is like a ruler calibrated down to the decimeter scale, which means there will be a lot of inaccuracies when measuring objects on the millimeter scale. That’s why we need to create a more accurate tool that is calibrated at a finer scale, and able to register smaller changes. Only when we use this more accurate ruler can we measure whether treatment is going in the right direction.”

The qVFM method developed by Lu and his team uses a Bayesian active learning algorithm to improve test accuracy and precision while saving time. The algorithm actively learns from the patient’s responses and, for each individual, determines the optimal test stimulus (e.g., its retinal location and light intensity or contrast) in each trial. It has three modules: a global module for preliminary assessment of the shape of the VFM, a local module for assessing individual areas of the visual field, and a switch module for determining when to switch from the global module to the local module.

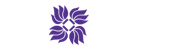

In the global module, the shape of the VFM is modeled as a tilted elliptic paraboloid function with five parameters (TEPF, shown in the image below). By actively learning the five parameters of the function from the trial-by-trial responses of the patient, the qVFM provides a rough estimate of the overall shape of the VFM.

Simulated visual-field map and tilted elliptic paraboloid function. The left panel shows the visual-field map of a simulated left eye with a blind spot. The right panel shows the corresponding tilted elliptic paraboloid function without the blind spot.
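For readers who want a concrete picture of a five-parameter tilted elliptic paraboloid, here is a minimal sketch. The article does not give the formula, so the parameterization below (a peak height, two widths, and two tilt slopes), the parameter names, the units, and the 10 x 10 grid layout are all assumptions made for illustration, not the published qVFM model.

```python
import numpy as np

def tepf(x, y, z0, a, b, slope_x, slope_y):
    """Tilted elliptic paraboloid (assumed form, for illustration only).

    x, y     : retinal location (assumed to be degrees of visual angle)
    z0       : height of the surface at the center (x = 0, y = 0)
    a, b     : widths of the paraboloid along x and y
    slope_x,
    slope_y  : linear tilt of the surface along x and y
    """
    return z0 - (x / a) ** 2 - (y / b) ** 2 + slope_x * x + slope_y * y

# Hypothetical 10 x 10 grid, giving 100 test locations.
xs = np.linspace(-27, 27, 10)
ys = np.linspace(-27, 27, 10)
grid_x, grid_y = np.meshgrid(xs, ys)

# Illustrative parameter values, not taken from the study.
vfm = tepf(grid_x, grid_y, z0=30.0, a=10.0, b=8.0, slope_x=0.02, slope_y=-0.01)
```

With only five numbers to learn instead of one value per location, a surface like this can be estimated from relatively few trials, which is consistent with the article's description of the global module as a quick, rough first pass.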

The switch module evaluates the rate of information gain on the shape of the VFM in the global module and switches to the local module once further testing no longer rapidly improves the precision of the TEPF estimate.
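One way to picture such a switching rule is sketched below. The article does not describe the actual criterion, so the function name `should_switch`, the window length, and the threshold are hypothetical; the sketch simply assumes that uncertainty about the global shape is tracked as an entropy value after every trial.

```python
def should_switch(entropy_history, window=5, min_gain_per_trial=0.01):
    """Return True when the recent average information gain per trial is low.

    entropy_history    : posterior entropy (in bits) recorded after each trial
    window             : number of most recent trials to average over (assumed)
    min_gain_per_trial : switching threshold in bits per trial (assumed)
    """
    if len(entropy_history) <= window:
        return False  # not enough trials yet to judge the rate of gain
    recent_gain = entropy_history[-window - 1] - entropy_history[-1]
    return recent_gain / window < min_gain_per_trial

# Entropy still dropping quickly: keep using the global module.
still_learning = should_switch([10.0, 9.0, 8.0, 7.0, 6.0, 5.0, 4.0])

# Entropy has flattened out: hand over to the local module.
flattened = should_switch([5.0, 4.0, 3.5, 3.49, 3.485, 3.482, 3.48, 3.479, 3.4785])
```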

The local module uses the results from the global module to generate the initial estimate of the visual function (e.g., light sensitivity) in each retinal location. It then uses another Bayesian active learning algorithm to further improve the precision of the estimated visual function in each retinal location.
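The following sketch illustrates the general idea of Bayesian active learning at a single retinal location. It is not the team's implementation: the logistic response model, the grid ranges, the guess and lapse rates, and the choice of minimizing expected posterior entropy are assumptions chosen for illustration. The optional `prior` argument stands in for the initial estimate that, according to the article, the global module supplies.

```python
import numpy as np

def p_seen(intensity, threshold, slope=1.0, guess=0.02, lapse=0.02):
    """Probability of a 'seen' response (assumed logistic psychometric function)."""
    core = 1.0 / (1.0 + np.exp(-slope * (intensity - threshold)))
    return guess + (1.0 - guess - lapse) * core

def entropy(p):
    """Shannon entropy in bits along the last axis."""
    p = np.clip(p, 1e-12, 1.0)
    return -np.sum(p * np.log2(p), axis=-1)

def expected_posterior_entropy(prior, thresholds, intensities):
    """Expected entropy after one trial, for every candidate intensity."""
    like_seen = p_seen(intensities[:, None], thresholds[None, :])
    p_resp_seen = like_seen @ prior  # marginal P(seen) per intensity
    post_seen = like_seen * prior / p_resp_seen[:, None]
    post_miss = (1.0 - like_seen) * prior / (1.0 - p_resp_seen)[:, None]
    return (p_resp_seen * entropy(post_seen)
            + (1.0 - p_resp_seen) * entropy(post_miss))

def update(prior, thresholds, intensity, seen):
    """Bayes' rule: reweight each candidate threshold by the response likelihood."""
    like = p_seen(intensity, thresholds)
    posterior = prior * (like if seen else 1.0 - like)
    return posterior / posterior.sum()

def run_local_trials(true_threshold, n_trials=30, prior=None, seed=0):
    """Simulate adaptive testing of one location with a simulated observer."""
    rng = np.random.default_rng(seed)
    thresholds = np.linspace(0.0, 40.0, 81)   # candidate thresholds (assumed range)
    intensities = np.linspace(0.0, 40.0, 41)  # candidate stimulus intensities
    if prior is None:
        # Placeholder: a flat prior when no global estimate is available.
        prior = np.full(thresholds.size, 1.0 / thresholds.size)
    posterior = prior
    for _ in range(n_trials):
        h = expected_posterior_entropy(posterior, thresholds, intensities)
        stimulus = intensities[np.argmin(h)]  # most informative stimulus
        seen = rng.random() < p_seen(stimulus, true_threshold)
        posterior = update(posterior, thresholds, stimulus, seen)
    return thresholds, posterior

thresholds, posterior = run_local_trials(true_threshold=25.0)
estimate = float(np.sum(thresholds * posterior))  # posterior mean threshold
```

Starting from an informative prior rather than a flat one means fewer trials are spent ruling out implausible values, which is one plausible reading of why the article reports that the full procedure outpaces the local module alone.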

The research team validated the method by conducting a series of simulations and a psychophysical experiment. They compared the performance of the full qVFM method with a reduced qVFM procedure that only consisted of the local module in order to illustrate the power of the entire procedure.

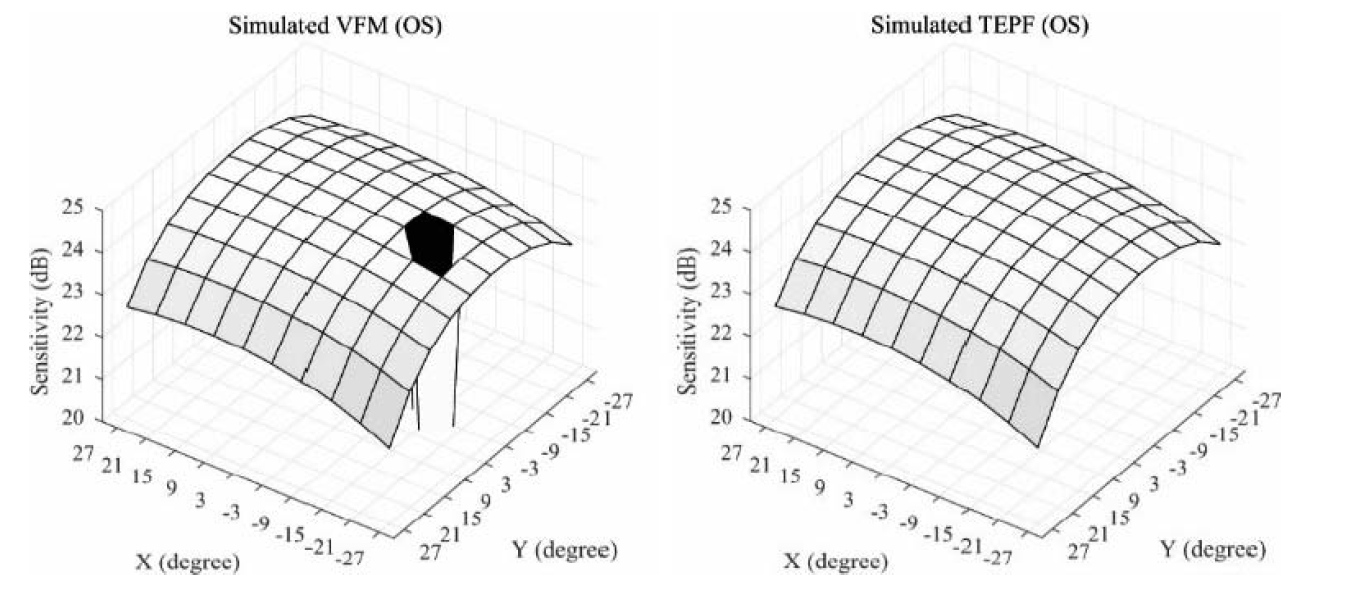

Researchers created simulated “observers” and preset the simulations to have normal vision. The “observers” viewed the computer display with one eye, and were cued to report the presence or absence of the target (a light disc) in one of 100 retinal locations. In the experiment, six human subjects were cued to report the presence or absence of a target stimulus in one of 100 retinal locations, with the light intensity and location of the target adaptively selected by the qVFM method in each trial.

In the experiment, subjects were asked to fixate on the center of the display and report whether the light disc was presented at the center of the location cue (circle).

Both the simulations and the experiment showed that the qVFM method is able to provide accurate and precise visual field mapping of light sensitivity. The full qVFM procedure worked at approximately twice the speed of the reduced procedure with only the local module. This method can be extended to map other visual functions to monitor vision loss, evaluate therapeutic interventions, and develop effective rehabilitation treatment for low vision.

Lu says he does not plan to limit his research to the mapping of light sensitivity. “The ultimate goal of studying visual function is to predict visual performance in daily life,” says Lu. “Our research should consider real situations in which people rely on their vision, such as reading and driving.”

To this end, Lu says the team has already conducted research to explore how accuracy, precision, and efficiency can be improved in measuring the visual-field map of contrast sensitivity, the visual ability to distinguish an object from its background. They are also exploring how VFMs are related to driving behavior.

In addition, the team is conducting a series of studies to explore better practices in visual function measurement, testing the qVFM method on patients with eye disorders, instead of on subjects with healthy vision, to ensure its effectiveness in mapping the visual fields of individuals with damaged vision.

Instead of asking test subjects to respond to stimuli by pressing buttons, the team has also developed a procedure based on eye tracking in which subjects can indicate the location of the target stimuli by moving their eyes.

The qVFM method has been disclosed in a PCT patent application. There are also active discussions on commercializing the technology.