Jan 14, 2022

Published by

NYU Shanghai

When you walk, how do you know how fast you are moving, or even whether you’re moving at all? And when you are moving, how can you tell when other objects in your environment are moving too?

Although most people take perceiving motion for granted, it requires the brain to process a great deal of visual and non-visual information through complicated calculations. Now a grant from the Joint Sino-German Research Program, funded by the National Natural Science Foundation of China (NSFC) and the German Research Foundation (DFG), will support an investigation into the interaction between self-motion perception and moving-object perception. The project is led by Li Li, Professor of Neural Science and Psychology, and research partner Markus Lappe of the University of Münster.

Their collaboration is one of 18 projects selected for the 2021 Joint Sino-German Research Program from nearly 150 applicants spanning Chemistry, Life Sciences, Management Sciences, and Medicine. The research, supported by a three-year grant of 2 million RMB in direct costs, will formally begin this month.

Li and Lappe’s investigation will combine laboratory experiments with human participants and advanced computational modeling to address two interacting, mirror-image questions that neuroscientists have long struggled to answer: How do the human brain and visual system combine visual and non-visual information to perceive self-motion in the presence of independently moving objects? And when humans are in motion, how do they perceive the motion of other objects in their surroundings?

“Answers to these two questions are crucial to understanding key abilities for human survival, such as navigation and locomotion in different environments,” said Li. “Only one previously published paper has tried to address these two questions at the same time. Our research aims to dig deeply into how the brain integrates multi-modal information to perceive both self-motion and independent object motion simultaneously while moving.”

A sample of the visual displays that participants view in the experiments before making judgments about their self-motion and about independent object motion.

The lead investigators bring together a wealth of experience in different neuroscience research approaches, combining Li’s two decades of lab work in optic flow and human locomotion abilities with Lappe’s nearly three decades of experience in computational modeling of optic flow and motion perception, including virtual reality studies of visually guided walking.

“We need computational modeling to guide experimentation and advance experiment design, because the perception of self-motion is affected by many factors, such as rotational and translational motion, the depth of the field, the velocity of object motion, and non-visual information,” Li said. “Since these numerous parameters interact with each other in complex ways, computational modeling will help us identify these interactions, interpret experimental data, and find the best visual stimuli for specific research questions. At the same time, experimental data are important for advancing computational modeling, because they let us verify and improve the model’s accuracy and determine its parameters.”

The pair’s approach combines research techniques that span neuroscience, computer science, and engineering. According to Li, this interdisciplinary approach mirrors the broad range of applications their findings could affect, including computer vision, drones, and self-driving vehicles, as well as flight simulators and other virtual reality occupational training programs.

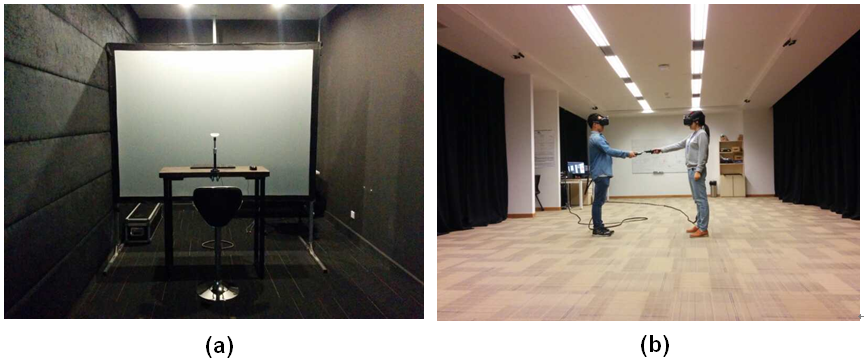

Left: Li’s Perception and Visuomotor Control Lab; Right: Perception and Action Virtual Reality Lab at NYU Shanghai.

Computational modeling will be carried out mainly by Lappe’s research team at the University of Münster in Germany, while experimental data will be collected primarily in two laboratories managed by Li: the Perception and Action Virtual Reality Lab at NYU Shanghai and the Perception and Visuomotor Control Lab at the NYU-ECNU Institute of Brain and Cognitive Science at NYU Shanghai. Li’s team will include NYU Shanghai Postdoctoral Fellow of Neuroscience Chen Jing, NYU Shanghai Ph.D. candidate in Neural Science ShanZhou Kuidong, and graduate students from the NYU Shanghai-ECNU Joint Graduate Training Program (NET) in Neural Science.

The Chinese and German labs have set up nearly parallel facilities so that all experiments and computational modeling can be reviewed and refined simultaneously.