Sep 28 2022

Published by

NYU Shanghai

By inspecting X-ray images, radiologists are able to make medical diagnoses. By studying satellite images, forecasters can predict the weather. These are not “superpowers,” of course; they are the result of years of perceptual learning, the phenomenon in which performance on perceptual tasks improves after targeted practice or training.

In a recent review article titled “Current Directions in Visual Perceptual Learning,” NYU Shanghai Chief Scientist and Professor of Neuroscience Zhong-Lin Lu and his long-term collaborator Barbara Anne Dosher, Professor of Cognitive Sciences at the University of California, Irvine, systematically examined the research developments, theories, models and applications of visual perceptual learning over the past 30 years. They also identified existing gaps in the field to inspire future research and collaboration. The review was published in the newly established journal Nature Reviews Psychology.

Research over the past 30 years has extensively documented perceptual learning in almost all visual tasks, ranging from simple feature detection to complex scene analysis, as well as its contributions to functional improvements during development, aging, and visual rehabilitation. “As perceptual performance can be so significantly altered by perceptual learning, a complete understanding of perception requires an understanding of how it is modified by perceptual learning,” said Lu.

Focusing on key behavioral aspects of visual perceptual learning, the review classified and described different kinds and levels of learning tasks. It then discussed “specificity,” a hallmark characteristic of perceptual learning observed in laboratory experiments dating back to the late 1990s. Specificity refers to the finding that performance improvements from training on one visual task usually do not generalize to other tasks, or even to the same task performed in a different setting. It has been a hot topic in the field, as scientists try to work out how specificity arises and how it can be avoided so that improvements transfer and generalize across learning tasks. The review discussed two theories, “representation enhancement” and “information reweighting,” and identified information reweighting as the dominant mechanism of perceptual learning.

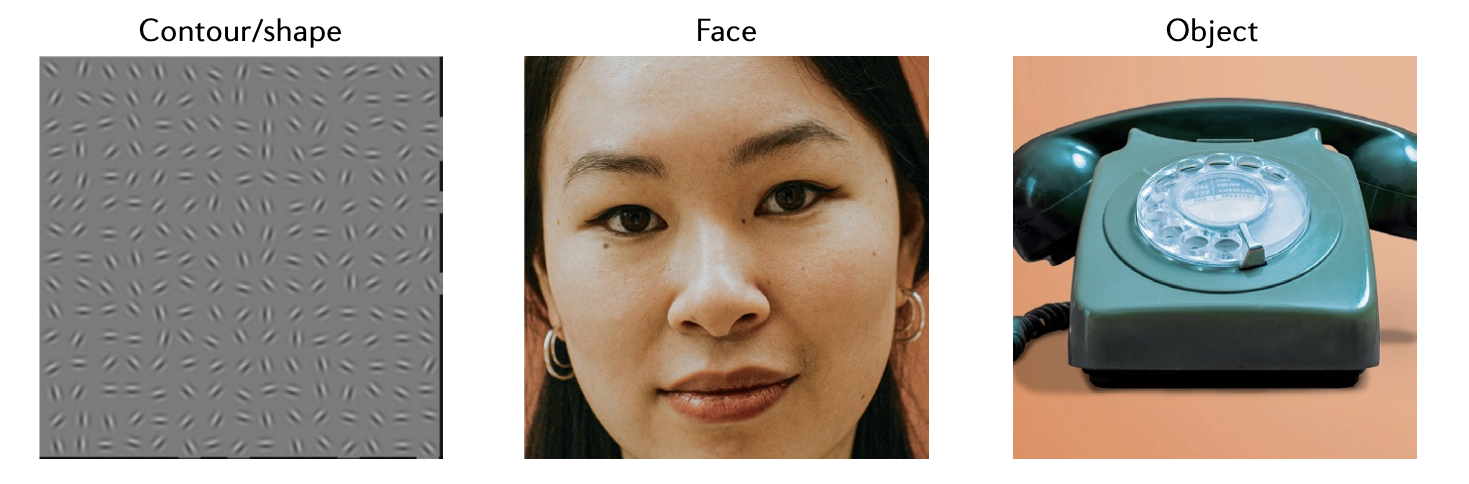

Examples of visual perceptual learning tasks: contour/shape detection, face recognition and object recognition

“Despite the tremendous progress made in our understanding of visual perceptual learning over the last three decades, there are still many unanswered questions,” said Lu. The review proposed four major research directions: developing better measurements to assess learning, identifying optimal training methods to maximize performance improvements, building more realistic brain network models, and expanding perceptual learning studies in children.

“Our goal was to have an integrated article that can connect different branches of researchers in perceptual learning and facilitate collaborations among them,” said Lu. “I believe there’s great value in scientists learning from each other’s work. For example, as I mentioned in the study, despite their differences, recent progress in deep convolutional neural networks for machine learning may shed light on specificity in human perceptual learning, and vice versa. But the precondition for promoting cooperation across fields is a mutual understanding of each other’s work.”

Building on this study, Lu is continuing to develop technologies for precisely assessing learning curves in visual perceptual learning and to build computational models that account for the mechanisms of perceptual learning. “Another focus of my work is on extending research to real-world applications, hoping to find better treatments for conditions like myopia and amblyopia,” he said.